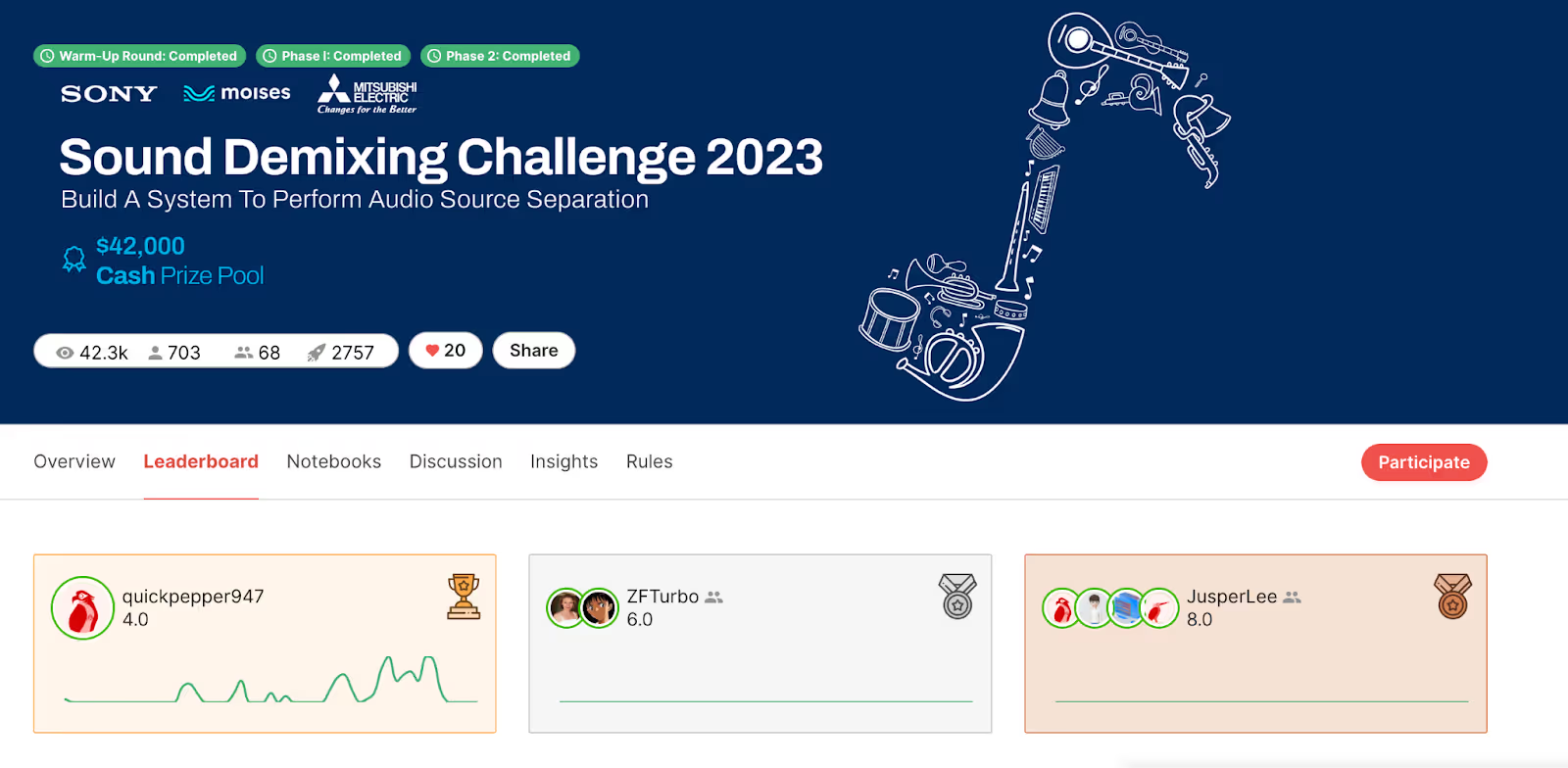

AudioShake wins Sony Demixing 2023

We’re excited to share that AudioShake once again came out on top in the Sony “Demixing Challenge.” In this global contest, participants from around the world (including research institutes, private companies, and open ML-audio communities) submit their AI models to run against the same test set so that their results can be compared by SDR score.

This year, the challenge was divided into two contests: music (separating a recording into four instrument stems) and film (separating music, dialogue, and effects). AudioShake placed number one overall in the challenge, with the highest total score across music and film, and the top dialogue score in film.

Following the score tallies, we withdrew from the music competition because one of our researchers had helped organize it (although with no access to competitive information). Our quick taste of glory, along with a painfully large compute bill, will live on in our memories and the Internet Archive.

A thought on source separation contests and SDR scores…and new models!

Since winning the first Demixing Challenge, we’ve shifted our team’s measurement approach to source separation, relying less and less on SDR score and the metrics measured in this competition, and more on perceptual metrics. We’ve learned that what lands high SDR scores is not necessarily what best addresses real-world use cases, which vary depending on the end customer and their needs. Indeed, the research paper released by the organizers reaches a similar conclusion: there is very little correlation between the best metrics and perceptual quality. For example, ByteDance, which had the highest-scoring model for SDR, came in third place in some of the perceptual tests. This poses an interesting challenge to us as a field: how can we evolve our evaluation metrics?
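For readers less familiar with SDR (signal-to-distortion ratio), here is a minimal sketch of one common way to compute it: the energy of the reference stem divided by the energy of the error, in decibels. The exact definition used by the challenge is not spelled out in this post, so treat the formula, the function name `sdr_db`, and the synthetic signals below as illustrative assumptions rather than the official scoring code.

```python
import math
import random


def sdr_db(reference, estimate, eps=1e-10):
    """Signal-to-distortion ratio in dB (assumed, simplified definition).

    reference: ground-truth stem samples
    estimate:  separated stem samples, same length as reference
    eps:       small constant to avoid division by zero or log of zero
    """
    signal_energy = sum(r * r for r in reference)
    error_energy = sum((r - e) ** 2 for r, e in zip(reference, estimate))
    return 10.0 * math.log10((signal_energy + eps) / (error_energy + eps))


# Synthetic one-second, 440 Hz tone standing in for a reference stem.
random.seed(0)
sample_rate = 8000
reference = [
    math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(sample_rate)
]

# Two hypothetical "separations" of that stem:
# one at the right level but with added hiss,
# one perfectly clean but at half volume.
noisy = [r + random.gauss(0.0, 0.1) for r in reference]
quiet = [0.5 * r for r in reference]

print(f"noisy estimate: {sdr_db(reference, noisy):.2f} dB")
print(f"quiet estimate: {sdr_db(reference, quiet):.2f} dB")
```

Under this definition, the clean half-volume copy scores only about 6 dB, well below the hissy one, even though many listeners would arguably prefer the clean version once the level is matched. It is a toy case, but it hints at why a sample-level error metric and perceived quality can disagree.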

Having said that, returning to the Challenge and SDR scores was a fun way to try some new approaches and gain new insights. We’ve taken our competition models, enhanced them with additional work that is not constrained to the limits of SDR score, and are releasing them to the public. They’re now available via our API and platforms, including our platform for indie artists, AudioShake Indie.

What most excites us about the continued enthusiasm for this space is the interaction of source separation with the real world, potentially opening up audio to new kinds of experiences. It’s great to see so many people active in the field of music information retrieval, particularly given its broad applications, from music and entertainment to hearing aids, haptics, and other audio experiences. Having more researchers tackle these challenges makes it possible to address real problems that affect people in their daily lives.